Socket.IO was created in 2010 to enable real-time communication over open connections. It allows bi-directional communication between client and server: once the Socket.IO client is loaded in the browser and the Socket.IO server library is integrated on the server, either side can send data to the other. JSON is one of the simplest forms in which data can be sent. Socket.IO uses Engine.IO to establish the connection and exchange data between client and server.

Socket.IO is often equated with WebSockets. WebSocket is a protocol, exposed in browsers through an API, that allows bi-directional communication, but Socket.IO does not always use WebSockets. That said, WebSocket is generally the preferred transport when it is available.

A chat app is the simplest way to explain how Socket.IO provides two-way communication. With Socket.IO in place, when the server receives a new message it pushes it to the connected client(s) and notifies them, so clients do not need to poll the server with repeated requests.

At Tecziq we use Socket.IO for many projects that require real-time communication. Socket.IO is also used in our ready-to-use Live Streaming application, which offers one-to-many and one-to-one streaming as well as a chat feature. It is a white-labelled solution that you can use with your own branding.

Socket.io – The Pros and Cons

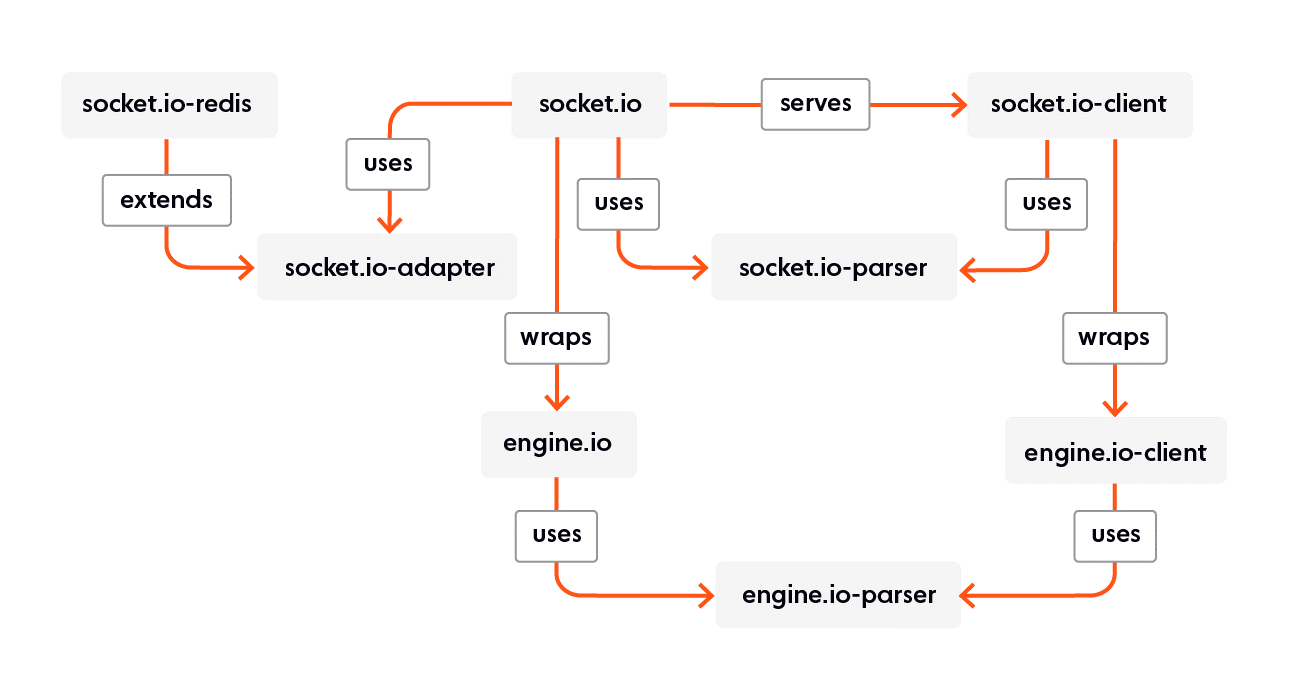

Now that all major browsers support WebSockets, it is sometimes said that there is no need for Socket.io anymore, on the assumption that all Socket.io offers is a fallback to long polling when WebSockets are not available. This is not quite true: the fallback to long polling is provided by a protocol called Engine.io, not by Socket.io itself, and Socket.io sits on top of Engine.io. Engine.io basically provides:

- Multiple underlying transports (WebSockets and long polling), which are able to deal with disparate browser capabilities and also able to detect and deal with disparate proxy capabilities, with seamless switching between transports.

- Liveness detection and maintenance.

- Reconnection in the case of errors.

Engine.io implements layer 5 of the OSI model (the session layer), while Socket.io implements layer 6, the presentation layer.

Even with wide WebSocket support, only half of the first point above becomes redundant. Browser support does not address proxies that do not support WebSockets (this can be worked around to some extent by using TLS). WebSockets also lack a usable mechanism for liveness detection: they support ping/pong, but there is no way to force a browser to send pings or to monitor for liveness, so the feature is of little use from the browser. Finally, browser WebSocket APIs do not have built-in reconnection features.
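Because the browser WebSocket API has no reconnection of its own, a client that skips Engine.io has to schedule its own retries. The following is a minimal sketch of the delay calculation only, assuming exponential backoff with a cap. The function name `reconnectDelayMs` and its default values are illustrative assumptions and are not taken from Engine.io or Socket.io.

```typescript
// Hypothetical helper: delay before the nth reconnection attempt (attempt starts at 0).
// Exponential backoff with an upper bound; the defaults are illustrative assumptions.
function reconnectDelayMs(attempt: number, baseMs = 1000, maxMs = 30000): number {
  const delay = baseMs * Math.pow(2, attempt);
  return Math.min(delay, maxMs);
}

const delays = [0, 1, 2, 3, 10].map((n) => reconnectDelayMs(n));
console.log(delays); // 1000, 2000, 4000, 8000, 30000
```

A real client would typically also add some random jitter so that many clients do not reconnect at the same instant after an outage.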

Socket.io provides three main features:

- Multiplexing of multiple namespaced streams down a single engine.io session.

- Encoding of messages as named JSON and/or binary events.

- Acknowledgment callbacks per event.

Let’s look into the above three features in more detail.

Namespaces

One of the great features of Socket.io is multiple namespaces. Consider an application with a lot of push communication going back and forth with the server: sometimes it needs to view logs, sometimes state changes, and sometimes events. These are rendered in different components and should be kept separate, because their lifecycles are separate, the backend implementations of the streams are separate, and so on. What you don’t want is one WebSocket connection to the server for each of these streams, as that would drastically increase the number of WebSocket connections. What you need is to multiplex them all into one connection while both client and server treat them as if they were separate connections, able to start and stop independently. This is what Socket.io allows.

If Socket.io did not provide this feature and you wanted to multiplex multiple streams into one connection, you would have to design your own multiplexing protocol and then, on the server side, carefully manage these messages and ensure that subscriptions are cleaned up properly. There is often a lot more to making this work cleanly than you might think.
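To give a feel for what such a hand-rolled protocol involves, here is a minimal sketch, assuming each message is a JSON envelope that names its stream. The `Envelope` shape and the `Multiplexer` class are hypothetical and do not reflect Socket.io's wire format; error handling and server-side cleanup are left out.

```typescript
// Envelope for a hypothetical hand-rolled multiplexing protocol (not Socket.io's wire format).
type Envelope = { stream: string; payload: unknown };
type Handler = (payload: unknown) => void;

class Multiplexer {
  private handlers = new Map<string, Handler>();

  // Start listening to one logical stream; returns a function that stops it.
  subscribe(stream: string, handler: Handler): () => void {
    this.handlers.set(stream, handler);
    return () => {
      this.handlers.delete(stream);
    };
  }

  // Call this with every raw text frame received on the single shared connection.
  dispatch(raw: string): boolean {
    const envelope = JSON.parse(raw) as Envelope;
    const handler = this.handlers.get(envelope.stream);
    if (!handler) return false; // no subscriber: the message is dropped
    handler(envelope.payload);
    return true;
  }
}

const mux = new Multiplexer();
const logs: unknown[] = [];
const stopLogs = mux.subscribe("logs", (p) => logs.push(p));

mux.dispatch(JSON.stringify({ stream: "logs", payload: "service started" }));
stopLogs(); // the "logs" stream ends; other streams would be unaffected
const delivered = mux.dispatch(JSON.stringify({ stream: "logs", payload: "ignored" }));
console.log(logs, delivered); // logs holds only "service started"; delivered is false
```

Even this small version has to decide what happens to messages that arrive after a stream is stopped, which is the kind of detail the paragraph above warns about.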

Socket.io pushes all the above mentioned complex work into the client/server libraries so that you as the application developer can just focus on your business problem.

Event Encoding

There are two important advantages to event encoding. The first is that when the naming of events is encoded by the protocol itself, the libraries that implement the protocol can understand more about your events and provide features on top of that, such as registering a handler per event name. This is not a very important advantage, as callbacks are not a very good way to deal with streams of events communicated over the network.

The second and bigger advantage is that it allows the event name to be independent of the actual encoding mechanism, which is particularly important for binary messages. It’s easy to put the event name inside a JSON object, but what happens when you want to send a binary message? You could encode it inside the JSON object, but that requires base64 and is inefficient. You could send it as a binary message, but then how do you attach metadata to it, such as the name of the event? You’d have to come up with your own encoding to pack the name into the binary along with the binary payload. Socket.io does this for you (it actually splits binary messages into multiple messages: a header text message that contains the name, followed by a linked binary message).
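As an illustration of the "come up with your own encoding" route, the sketch below packs an event name and a binary payload into one frame. The layout (a 2-byte name length, the UTF-8 name, then the payload) and the function names are assumptions made for this example; Socket.io itself uses the header-plus-attachment approach described above, not this format.

```typescript
// Hypothetical framing: [2-byte name length][UTF-8 event name][raw binary payload].
// This is not Socket.io's wire format.
function encodeEvent(name: string, payload: Uint8Array): Uint8Array {
  const nameBytes = new TextEncoder().encode(name);
  if (nameBytes.length > 0xffff) throw new Error("event name too long");
  const frame = new Uint8Array(2 + nameBytes.length + payload.length);
  frame[0] = nameBytes.length >> 8; // high byte of the name length
  frame[1] = nameBytes.length & 0xff; // low byte of the name length
  frame.set(nameBytes, 2);
  frame.set(payload, 2 + nameBytes.length);
  return frame;
}

function decodeEvent(frame: Uint8Array): { name: string; payload: Uint8Array } {
  const nameLength = (frame[0] << 8) | frame[1];
  const name = new TextDecoder().decode(frame.subarray(2, 2 + nameLength));
  return { name, payload: frame.subarray(2 + nameLength) };
}

const encoded = encodeEvent("thumbnail", new Uint8Array([0xff, 0xd8, 0xff]));
const decoded = decodeEvent(encoded);
console.log(decoded.name, decoded.payload.length, encoded.length); // thumbnail 3 14
```

The payload travels as raw bytes, so there is no base64 overhead, but both ends now have to agree on and maintain this format.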

Callback-Centric & No Message Guarantee

As mentioned previously, Socket.io being callback-centric is not an advantage. The downside of callbacks is that they do not give your application backpressure or message guarantees. If there are a lot of asynchronous tasks, the server can be overwhelmed because it receives events faster than it can process them, which can cause it to run out of memory or CPU. Similarly, when sending events from server to client, if the server produces events too quickly for the client, the client can run out of memory buffering them.
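The idea behind backpressure can be sketched with a bounded buffer: instead of buffering without limit, the receiver refuses new events when it is full, which tells the producer to slow down. The `BoundedBuffer` class below is a hypothetical, single-process illustration and is not part of Socket.io.

```typescript
// Hypothetical bounded buffer: offer() refuses new events when full, which signals
// the producer to slow down instead of letting memory grow without limit.
class BoundedBuffer<T> {
  private items: T[] = [];
  private capacity: number;

  constructor(capacity: number) {
    this.capacity = capacity;
  }

  offer(item: T): boolean {
    if (this.items.length >= this.capacity) return false; // apply backpressure
    this.items.push(item);
    return true;
  }

  // The consumer takes one event when it is ready for more work.
  poll(): T | undefined {
    return this.items.shift();
  }
}

const buffer = new BoundedBuffer<string>(2);
const accepted = ["a", "b", "c"].map((e) => buffer.offer(e));
console.log(accepted); // true, true, false: "c" is refused until the consumer catches up

buffer.poll(); // the consumer processes "a"
const retried = buffer.offer("c");
console.log(retried); // true
```

A plain event callback has no equivalent of the `false` return value, so the sender never learns that the receiver is falling behind.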

One thing to understand is that whether an implementation of Socket.io uses callbacks or streams is an implementation concern, not something inherent to Socket.io itself. With a stream-based implementation, for example using Akka to send events and ngrx to handle events on the client side, backpressure can be supported.

Socket.io has no built-in mechanism for message guarantees. The acknowledgments feature can be used, but that requires the server to track which messages have been received. A Kafka-like approach is a better option: the client is responsible for its own tracking by recording message offsets, and when a stream is resumed after a disconnect, for whatever reason, the open message sent to the namespace includes the offset of the last message the client received. This allows the server to resume sending messages from that point. This feature is built into Server Sent Events.
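The offset-based approach can be sketched as follows, assuming the server keeps an append-only log per stream and uses array positions as offsets. The `EventLog` class and its method names are hypothetical, and a real system would also need to bound how much history it retains.

```typescript
// Hypothetical server-side log for one stream; offsets are array positions.
class EventLog {
  private events: string[] = [];

  append(event: string): number {
    this.events.push(event);
    return this.events.length - 1; // offset assigned to this event
  }

  // Everything after the offset the client says it last received.
  // Pass -1 to read from the start.
  readAfter(lastOffset: number): { offset: number; event: string }[] {
    return this.events
      .slice(lastOffset + 1)
      .map((event, i) => ({ offset: lastOffset + 1 + i, event }));
  }
}

const log = new EventLog();
["created", "updated", "deleted"].forEach((e) => log.append(e));

// The client tracks its own position; the server keeps no per-client state.
let lastSeen = -1;
log.readAfter(lastSeen).slice(0, 2).forEach((m) => (lastSeen = m.offset));

// After a disconnect, the client sends lastSeen along with its open message.
const resumed = log.readAfter(lastSeen);
console.log(lastSeen, resumed); // 1 and a single entry: offset 2, "deleted"
```

Because the client supplies the offset, any server holding the log can resume the stream, which is harder to achieve when the server has to remember acknowledgments per client.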

Maintaining & Operating Socket.IO

Getting started with Socket.IO is quite simple: if you are building a realtime application for a limited number of users, all you need is a Node.js server to run it on. It gets more complex when you need to scale the application. Below we look at some of the issues you can face when scaling.

Socket.IO is built on asynchronous networking libraries. Maintaining connections to users while sending and receiving messages puts strain on the server. In addition, when clients start to send substantial amounts of data, the data is streamed in chunks and resources must be freed up to transmit it. As the number of users grows, your server reaches its maximum capacity and you need to split connections over multiple servers; otherwise you will lose messages. However, it is not as straightforward as just adding a new server. Sockets work on the concept of an open connection between a server and a client, and a given server only knows about the clients connected to it directly, not those connected to other servers.

As mentioned, the solution is not straightforward, but one exists: a pub/sub store (e.g. Redis). The pub/sub store solves the problem by notifying all the servers of what needs to be done. The cost is that you need an additional database and a server to run it on.

You can use an adapter built on socket.io-adapter, which works with the pub/sub store and the servers to share the information. You can either write your own implementation of this adapter or use the one provided for Redis.
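The role of the pub/sub store can be illustrated with an in-memory stand-in. In the sketch below, the `PubSub` class plays the part of Redis inside a single process, and `ChatServer` is a hypothetical server that only knows its own clients; neither reflects the actual adapter API.

```typescript
type Listener = (message: string) => void;

// In-memory stand-in for a pub/sub store such as Redis (hypothetical, single process).
class PubSub {
  private listeners: Listener[] = [];

  subscribe(listener: Listener): void {
    this.listeners.push(listener);
  }

  publish(message: string): void {
    this.listeners.forEach((listener) => listener(message));
  }
}

// Each server only knows the clients connected to it directly.
class ChatServer {
  readonly inboxes = new Map<string, string[]>();
  private bus: PubSub;

  constructor(bus: PubSub) {
    this.bus = bus;
    // Deliver every published message to this server's local clients.
    bus.subscribe((message) => {
      this.inboxes.forEach((inbox) => inbox.push(message));
    });
  }

  connect(clientId: string): void {
    this.inboxes.set(clientId, []);
  }

  // Broadcasting goes through the shared store, not straight to local clients.
  broadcast(message: string): void {
    this.bus.publish(message);
  }
}

const bus = new PubSub();
const serverA = new ChatServer(bus);
const serverB = new ChatServer(bus);
serverA.connect("alice");
serverB.connect("bob");

serverA.broadcast("hello");
console.log(serverB.inboxes.get("bob")); // bob receives "hello" although he is on serverB
```

With a real store the publish step crosses the network, which is why the extra database and server mentioned above become part of the deployment.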

A WebSocket keeps a single connection open, but when the connection falls back to polling there are multiple requests during the lifetime of the connection. If any one of these requests goes to a different server, you will receive an error: “Error during WebSocket handshake: Unexpected response code: 400”. This can be handled by routing clients based on their originating address or a cookie. Socket.IO has some great documentation on how to solve this for different environments.
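Routing by originating address amounts to a deterministic mapping from client address to server. The sketch below shows the idea with a simple hash; the `pickServer` function, the hash, and the server names are illustrative assumptions. In practice this is normally configured in the load balancer rather than written by hand.

```typescript
// Hypothetical sticky router: hash the client address to choose a backend, so that
// every request from the same address lands on the same server.
// Assumes the servers list is not empty.
function pickServer(clientAddress: string, servers: string[]): string {
  let hash = 0;
  for (let i = 0; i < clientAddress.length; i++) {
    // Simple 32-bit rolling hash; >>> 0 keeps the value unsigned.
    hash = (hash * 31 + clientAddress.charCodeAt(i)) >>> 0;
  }
  return servers[hash % servers.length];
}

const servers = ["node-1:3000", "node-2:3000", "node-3:3000"];
const first = pickServer("203.0.113.7", servers);
const second = pickServer("203.0.113.7", servers);
console.log(first === second); // true: repeated requests stay on one server
```

Note that with this simple scheme, changing the number of servers remaps most clients, so existing polling sessions may need to reconnect.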

Conclusion

For streaming, chat, or any application that needs open two-way communication, Socket.io is very useful, and the widespread support for WebSockets does not make it redundant. One of the strongest features of Socket.io is namespacing.

Multi-argument events and acknowledgments are features for which alternative solutions exist, and those alternatives should be preferred. Using plain single-argument events integrates well with any reactive streaming technology you choose and lets you use causal-ordering-based tracking of events to implement messaging guarantees.

At Tecziq we make it easy to efficiently design, develop, support and scale real-time applications. Our streaming and chat application delivers millions of realtime messages and streams to thousands of users for a lot of organizations.

If you are looking to develop a new realtime solution, or if you have an application that needs to be maintained or upgraded, we are available to discuss and suggest the best approach. You can reach us at sales@tecziq.com and info@tecziq.com, and one of our experts will get in touch with you to discuss how we can collaborate.